Abstract

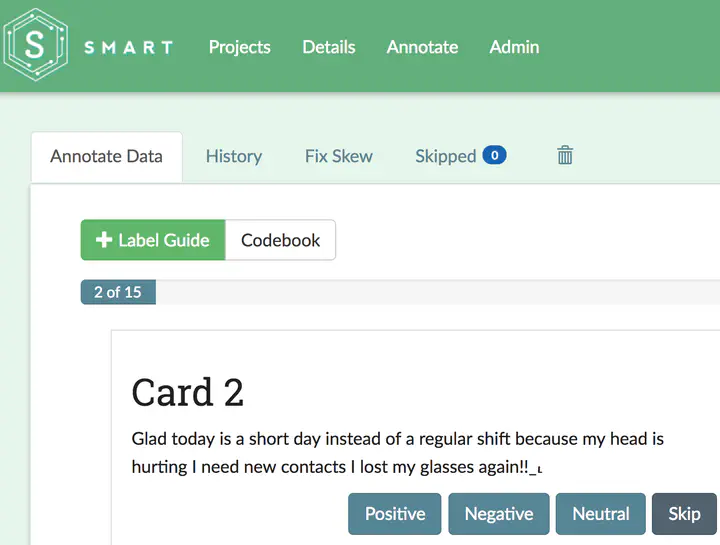

As many in the AI field transition from a model-centric to a data-centric approach, the quality of training data is receiving increased attention. We bring together literature from the fields of social psychology, survey methodology, and machine learning to develop hypotheses about the factors that influence the quality of the data collected from annotators. We find evidence that the way in which the labeling task is structured, the order in which tasks are presented, and who works as annotators all influence the accuracy of the labels collected. Our findings have implications for how labels are collected and ultimately for the efficiency and accuracy of machine learning models.